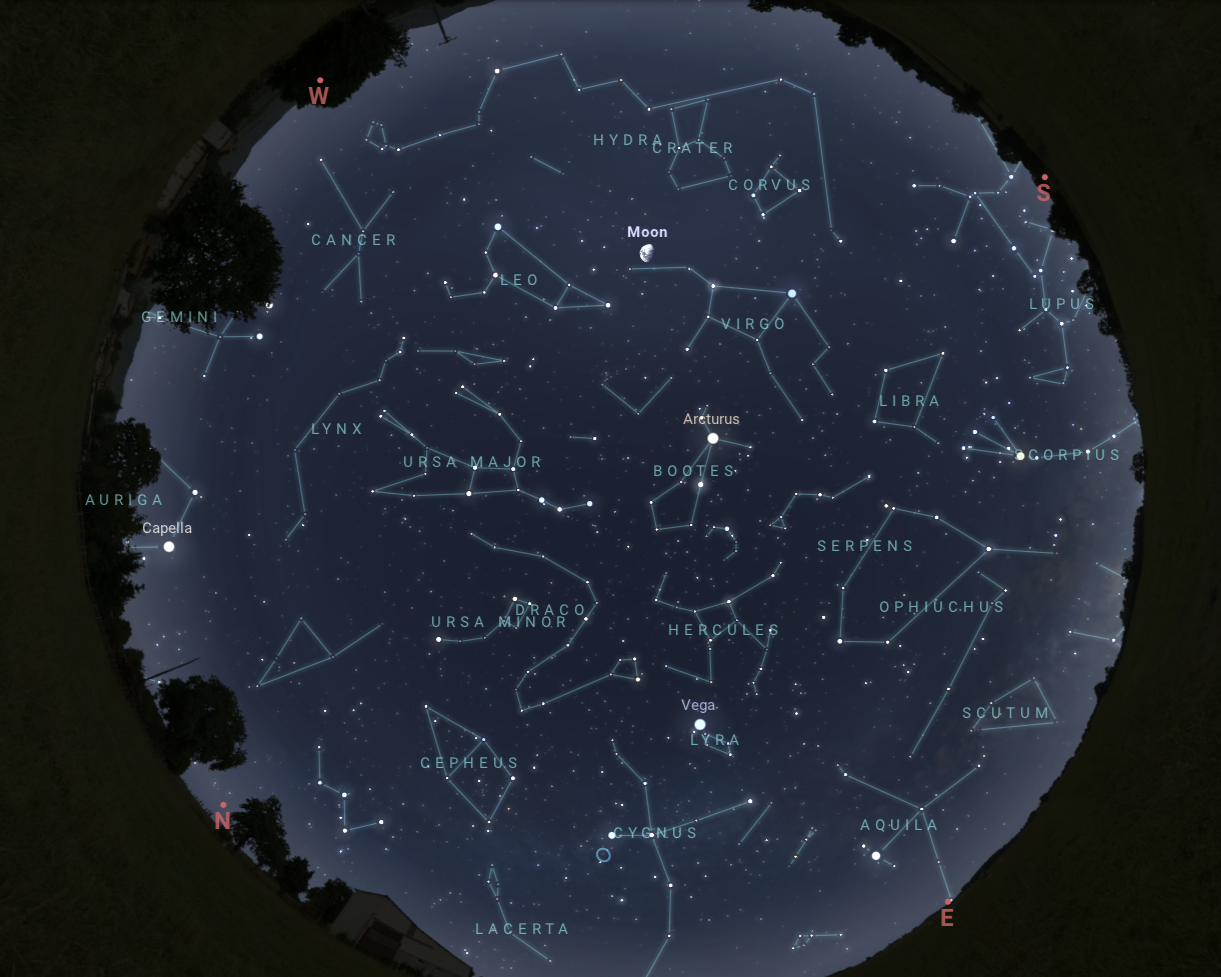

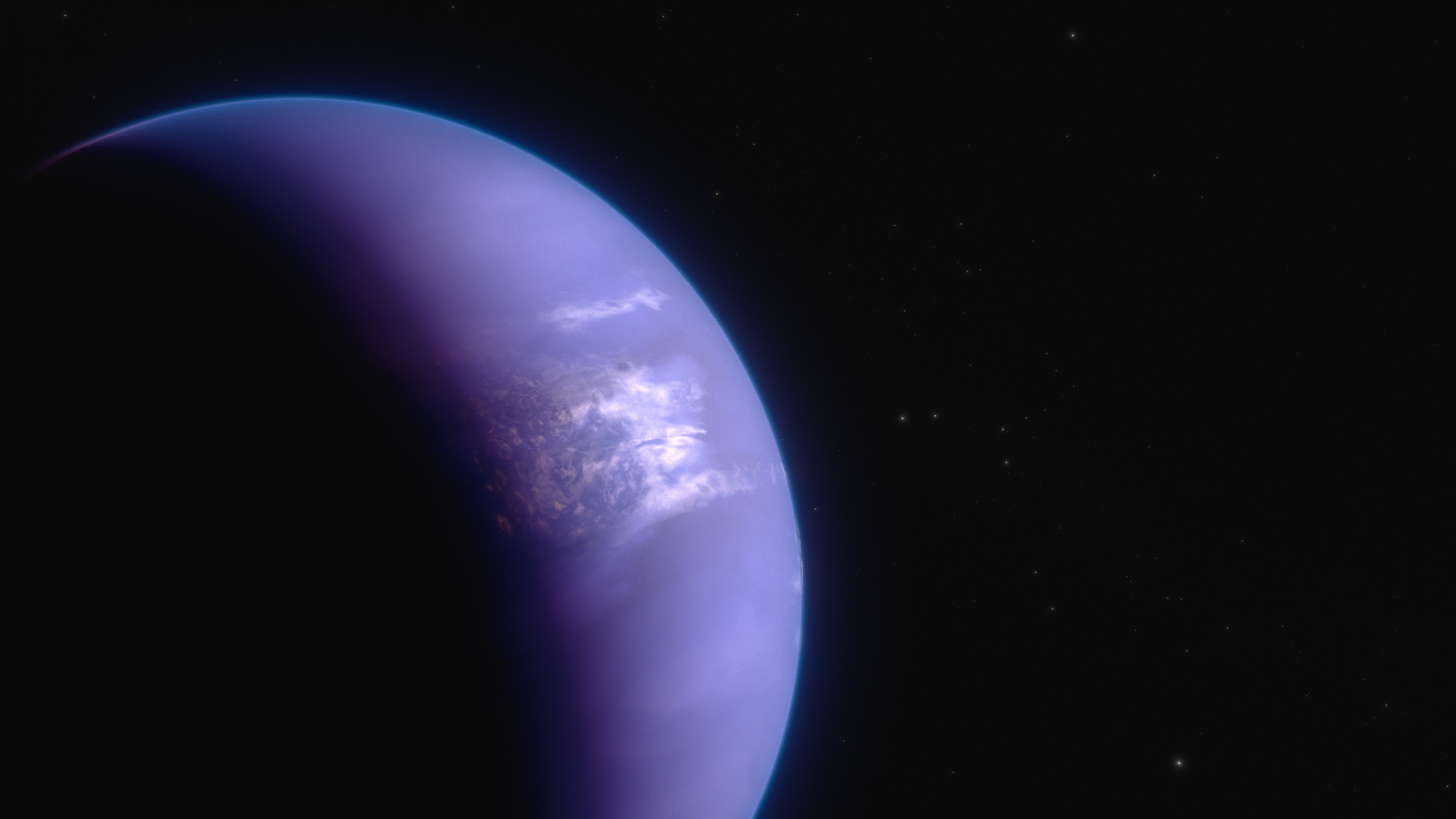


NASA scientists using virtual reality technology are redefining our understanding of how our galaxy works.

Using a customized, 3D virtual reality (VR) simulation that animated the speed and direction of 4 million stars in the local Milky Way neighborhood, astronomer Marc Kuchner and researcher Susan Higashio obtained a new perspective on the stars’ motions, improving our understanding of star groupings.

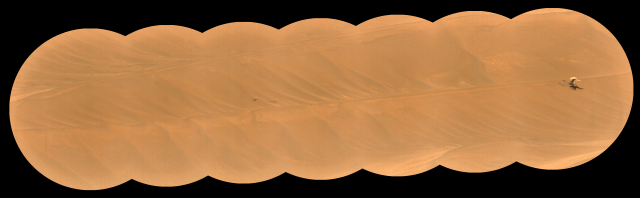

Astronomers have come to different conclusions about the same groups of stars from studying them in six dimensions using paper graphs, Higashio said. Groups of stars moving together indicate to astronomers that they originated at the same time and place, from the same cosmic event, which can help us understand how our galaxy evolved.
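To make "moving together" concrete, here is a minimal Python sketch of flagging co-moving stars. It assumes the six dimensions are three of position and three of velocity, which is the usual convention but is not spelled out above. The sample stars, the thresholds, and the `comoving_pairs` helper are all hypothetical illustrations, not the method Kuchner and Higashio used.

```python
import math
from itertools import combinations

# Hypothetical sample data: (name, position in parsecs, velocity in km/s).
STARS = [
    ("A", (10.0, 5.0, -2.0), (-10.0, -16.0, -9.0)),
    ("B", (12.0, 6.0, -1.0), (-10.5, -15.5, -9.2)),
    ("C", (11.0, 4.0, -3.0), (25.0, 3.0, 14.0)),
]

def comoving_pairs(stars, max_sep_pc=20.0, max_dv_kms=2.0):
    """Return name pairs that are close in both position and velocity.

    The thresholds are illustrative assumptions, not published values.
    """
    pairs = []
    for (n1, p1, v1), (n2, p2, v2) in combinations(stars, 2):
        close_in_space = math.dist(p1, p2) <= max_sep_pc
        similar_motion = math.dist(v1, v2) <= max_dv_kms
        if close_in_space and similar_motion:
            pairs.append((n1, n2))
    return pairs

print(comoving_pairs(STARS))
```

In this toy data, star C sits near A and B but moves in a very different direction, so only A and B are flagged as a candidate pair.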

Goddard’s virtual reality team, managed by Thomas Grubb, animated those same stars, revolutionizing the classification process and making the groupings easier to see, Higashio said. They found stars that may have been classified into the wrong groups as well as star groups that could belong to larger groupings.

Kuchner presented the findings at the annual American Geophysical Union (AGU) conference in early December 2019. Kuchner and Higashio, both at NASA’s Goddard Space Flight Center in Greenbelt, Maryland, plan to publish a paper on their findings next year, along with engineer Matthew Brandt, the architect for the PointCloudsVR simulation they used.

“Rather than look up one database and then another database, why not fly there and look at them all together,” Higashio said. She watched these simulations hundreds, maybe thousands of times, and said the associations between the groups of stars became more intuitive inside the artificial cosmos found within the VR headset. Observing stars in VR will redefine astronomers’ understanding of some individual stars as well as star groupings.

The 3D visualization helped her and Kuchner understand how the local stellar neighborhood formed, opening a window into the past, Kuchner said. “We often find groups of young stars moving together, suggesting that they all formed at the same time,” Kuchner said. “The thinking is they represent a star-formation event. They were all formed in the same place at the same time and so they move together.”

“Planetariums are uploading all the databases they can get their hands on and they take people through the cosmos,” Kuchner added. “Well, I’m not going to build a planetarium in my office, but I can put on a headset and I’m there.”

Realizing a Vision

The discovery realized a vision for Goddard Chief Technologist Peter Hughes, who saw the potential of VR to aid in scientific discovery when he began funding engineer Thomas Grubb’s VR project more than three years ago under the center’s Internal Research and Development (IRAD) program and NASA’s Center Innovation Fund [CuttingEdge, Summer 2017]. “All of our technologies enable the scientific exploration of our universe in some way,” Hughes said. “For us, scientific discovery is one of the most compelling reasons to develop an AR/VR capability.”

The PointCloudsVR software has been officially released and open sourced on NASA’s GitHub site: https://github.com/nasa/PointCloudsVR

Scientific discovery isn’t the only beneficiary of Grubb’s lab.

The VR and augmented reality (AR) worlds can help engineers across NASA and beyond, Grubb said. VR puts the viewer inside a simulated world, while AR overlays computer-generated information onto the real world. Since the first “viable” headsets came on the market in 2016, Grubb said, his team has been developing solutions, like the star-tracking world Kuchner and Higashio explored, as well as virtual hands-on applications for engineers working on next-generation exploration and satellite servicing missions.

Engineering Applications

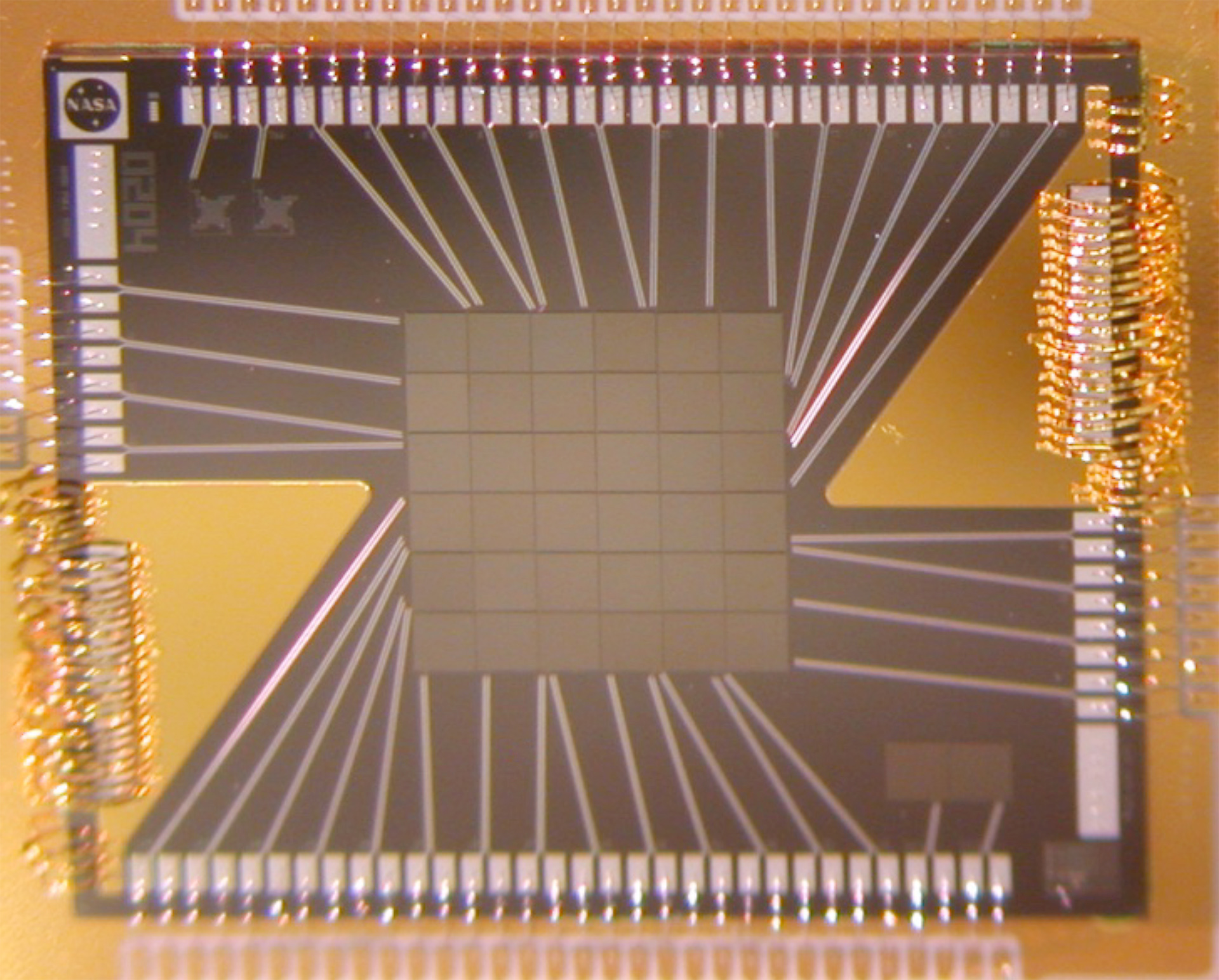

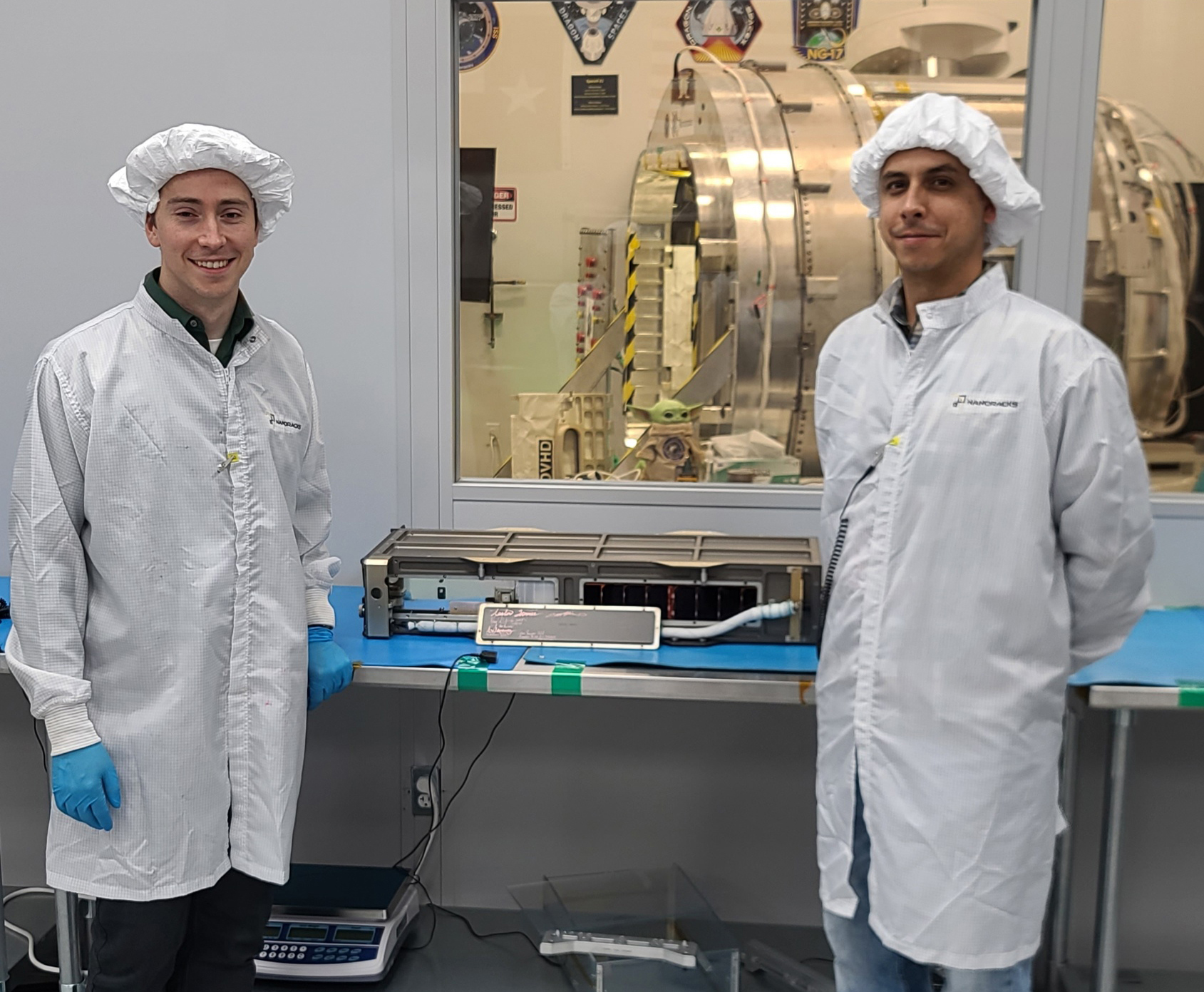

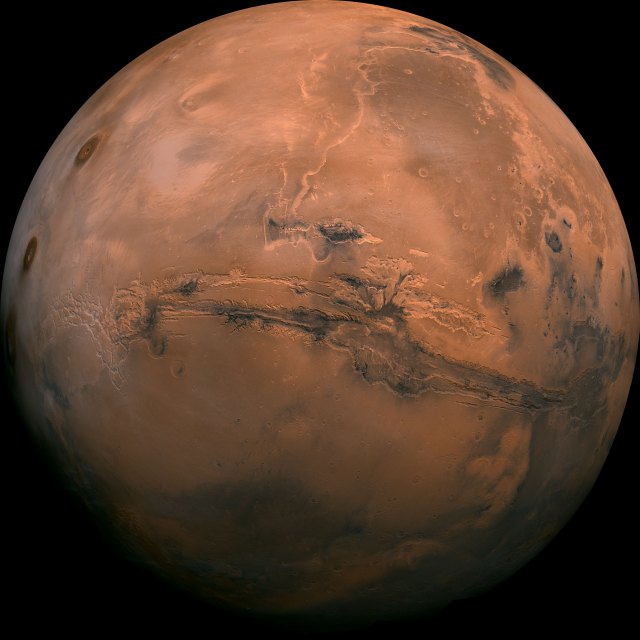

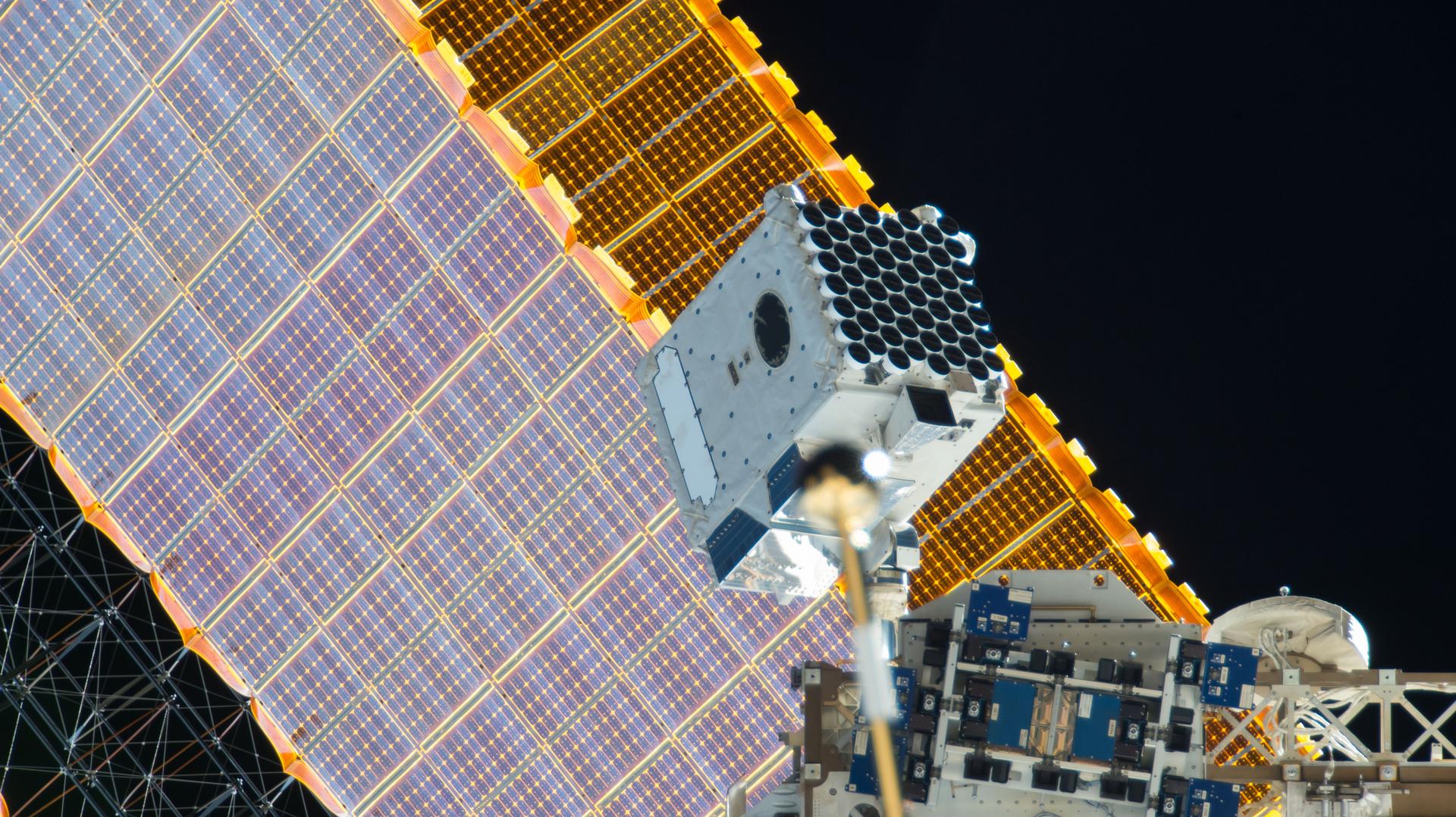

Grubb’s VR/AR team is now working to realize the first intra-agency virtual reality meet-ups, or design reviews, as well as supporting missions directly. His clients include the Restore-L project, which is developing a suite of tools, technologies, and techniques needed to extend satellites’ lifespans; the Wide Field Infrared Survey Telescope (WFIRST) mission; and various planetary science projects.

“The hardware is here; the support is here,” Grubb said. “The software is lagging, as well as conventions on how to interact with the virtual world. You don’t have simple conventions like pinch and zoom or how every mouse works the same when you right click or left click.”

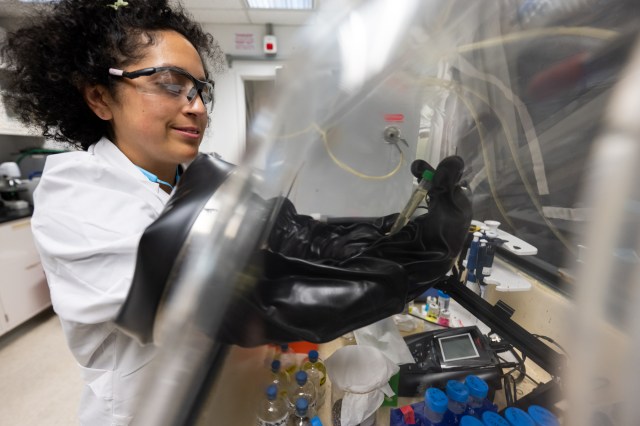

That’s where Grubb’s team comes in, he said. To overcome these usability issues, the team created a framework called the Mixed Reality Engineering Toolkit (MRET) and is training groups on how to work with it. MRET, which is currently available for government agencies, assists in science-data analysis and enables VR-based engineering design: from concept designs for CubeSats to simulated hardware integration and testing for missions and in-orbit visualizations like the one for Restore-L.

For engineers and mission and spacecraft designers, VR offers cost savings in the design/build phase before they build physical mockups, Grubb said. “You still have to build mockups, but you can work out a lot of the iterations before you move to the physical model,” he said. “It’s not really sexy to the average person to talk about cable routing, but to an engineer, being able to do that in a virtual environment and know how much cabling you need and what the route looks like, that’s very exciting.”

In a mockup of the Restore-L spacecraft, for example, Grubb showed how the VR simulation would allow an engineer to “draw” a cable path through the instruments and components, with the software then reporting the cable length needed to follow that path. Tool paths to build, repair, and service hardware can also be worked out virtually, down to whether the tool will fit and be usable in confined spaces.
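The arithmetic behind a cable-length readout can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the drawn path is treated as straight segments between 3D waypoints, and the `cable_length` function and its `slack_fraction` parameter are hypothetical names, not part of MRET, whose internals are not described here.

```python
import math

def cable_length(waypoints, slack_fraction=0.0):
    """Sum the straight-line segment lengths along a list of 3D waypoints.

    slack_fraction is a hypothetical allowance for extra cable,
    e.g. 0.1 adds 10 percent to the measured path length.
    """
    if len(waypoints) < 2:
        return 0.0
    total = sum(math.dist(a, b) for a, b in zip(waypoints, waypoints[1:]))
    return total * (1.0 + slack_fraction)

# Hypothetical path in centimeters: a 5 cm run followed by a 12 cm run.
path = [(0.0, 0.0, 0.0), (3.0, 4.0, 0.0), (3.0, 4.0, 12.0)]
print(cable_length(path))  # 17.0
```

A real tool would also need to account for bend radius and connector clearance, which this sketch ignores.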

In addition, Grubb’s team worked with a team from NASA’s Langley Research Center in Hampton, Virginia, this past summer to determine how to interact with visualizations over NASA’s communication networks. This year, they plan to enable people at Goddard and Langley to fully interact with the visualization. “We’ll be in the same environment and when we point at or manipulate something in the environment, they’ll be able to see that,” Grubb said.

Augmented Science — a Better Future

For Kuchner and Higashio, the idea of being able to present their findings within a shared VR world was exciting. And like Grubb, Kuchner believes VR headsets will be a more common science tool in the future. “Why shouldn’t that be a research tool that’s on the desk of every astrophysicist,” he said. “I think it’s just a matter of time before this becomes commonplace.”

For more Goddard technology news, go to:

https://www.nasa.gov/wp-content/uploads/2023/03/winter-2020-final-web-version.pdf?emrc=497b53